Durability testing for advanced optical coatings beyond DLC is rarely a question of finding a “tougher” coating. It is a question of proving that a specific coating stack on a specific substrate will survive the contact, cleaning chemistry, humidity, temperature swings, and handling that the product will actually see.

Many coatings do not fail as dramatic peel-offs in early builds. They fail as subtle performance drift, reflectance shifts, increased haze or scatter, edge defects that grow after cycling, or cosmetic damage that appears only after repeated wiping.

If the test plan is not tied to real threats and measurable acceptance criteria, results can look reassuring while still failing to predict field behavior.

This guide lays out a practical way to qualify “beyond DLC” optical coatings. It clarifies which coating families are in scope, outlines the failure modes that matter, shows how to select tests and set severity, and closes with a sign-off checklist built around repeatable measurement and release-ready criteria.

Key Takeaways

“Durability” is a set of failure modes; qualification starts by defining threats, not coating names.

Select tests and severity based on real contact, cleaning chemistry, and environment—not generic “abrasion resistance.”

Witness samples are helpful early on, but sign-off often requires evidence from real parts or representative builds.

Pre/post optical measurements prevent “passed visually, failed optically” outcomes.

A durability plan is incomplete without acceptance thresholds, sampling logic, and a repeatable inspection method.

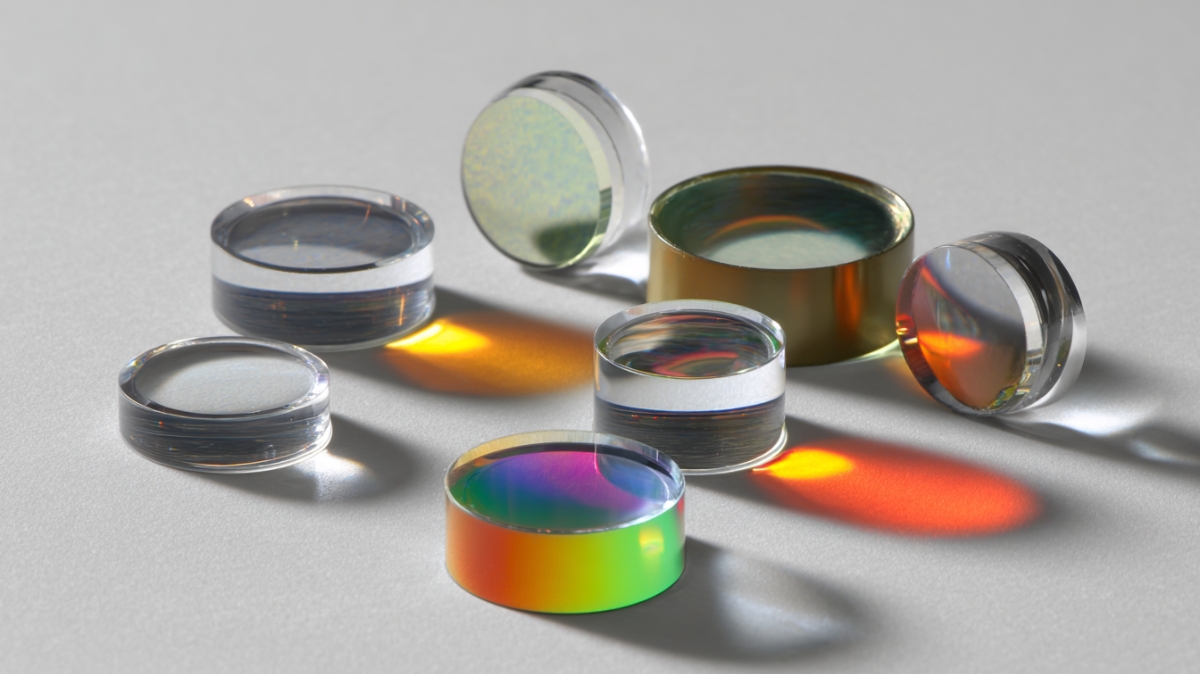

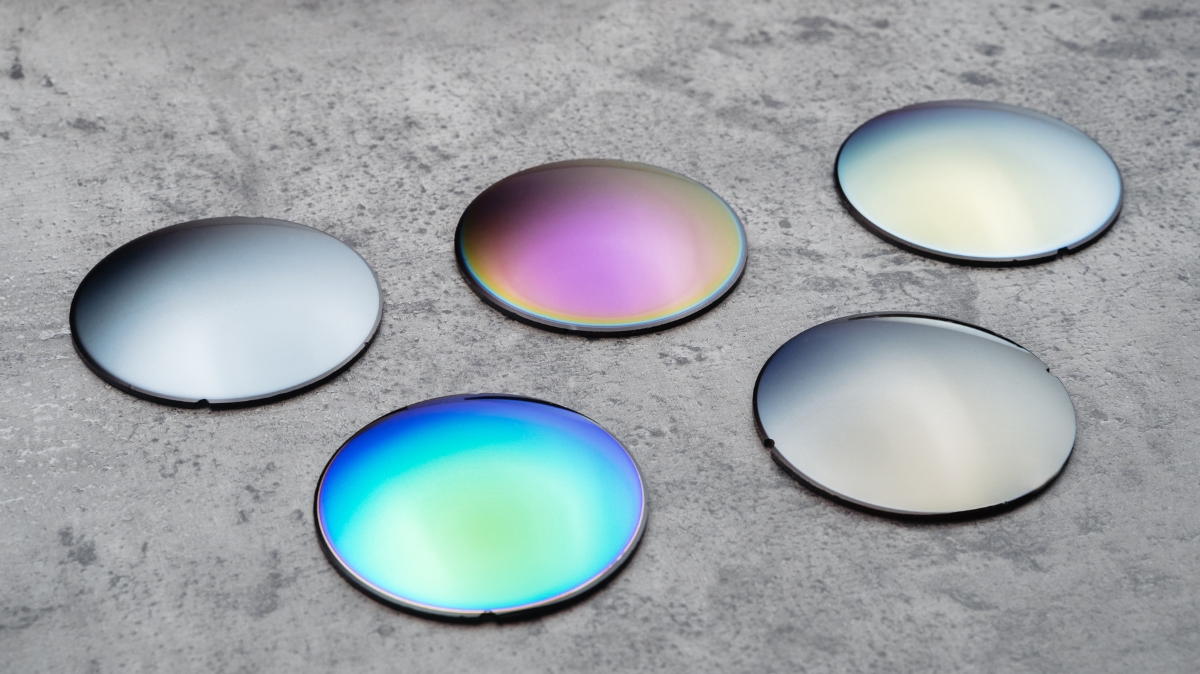

What “Beyond DLC” Coatings Include (AR, Mirrors, Filters, Protective Stacks)

When teams say they need durability “beyond DLC,” they are usually not asking for a single alternative coating. They are asking how to qualify the durability of optical thin-film stacks that deliver an optical function first, then survive real handling and environmental exposure.

In most programs, “beyond DLC” covers thin films designed for transmission and reflectance control, including:

Anti-reflective (AR) coatings for one or more wavelength bands

Reflective coatings (metallic and/or dielectric mirror stacks)

Filters (bandpass, longpass/shortpass, notch, or custom spectral shaping)

Beamsplitters and partially reflective stacks

Protective topcoats applied to improve survivability without sacrificing optical performance

A useful correction at this stage is that “hardness” is not the durability strategy by itself. Durable behavior depends on how the full stack is designed, the substrate it is on, and what the optic will encounter in use, including wiping, particulates, humidity cycling, thermal exposure, cleaning chemistry, and packaging stresses.

This is why coating performance and coating durability cannot be qualified as separate conversations. A stack that meets spectral targets at time zero is not “qualified” until the same targets remain within limits after the relevant durability exposures.

That connection (optical function first, durability proven second) is what prevents a coating choice from becoming a late-stage integration problem.

Once coating types are clear, the next step is defining what “durability” must survive in your operating environment.

Coating Durability Failure Modes to Test For

Durability failures in optical coatings typically present as system degradation rather than catastrophic damage: scatter rise, wipe-driven cosmetic defects, or performance drift that appears only after cycling.

If failure modes are not defined up front, test plans often default to generic abrasion checks that miss the actual limiter.

Below are the failure modes that most commonly drive qualification decisions for advanced optical thin films.

Abrasion and handling damage

This includes more than abrasion in the lab sense. It is the cumulative effect of real contact: wiping during cleaning, incidental rubbing during assembly, contact with fixturing, and particulate drag.

The first symptom is often cosmetic marking or a subtle increase in haze/scatter rather than a dramatic scratch pattern.

Adhesion and delamination risk

Adhesion problems can appear as local peel, edge lift, or interlayer separation within a stack, especially after environmental exposure. Adhesion is not only “did it stick on day one,” but also whether it continues to adhere after humidity cycling, thermal cycling, and cleaning exposure.

Humidity and condensation effects

Moisture can drive haze formation, defect growth, or adhesion loss. Systems that see condensation cycles, high humidity storage, or repeated temperature transitions tend to expose weaknesses that are invisible in dry, room-temperature handling.

Thermal cycling effects

Thermal cycling loads the coating/substrate interface mechanically. Mismatch in thermal expansion can create stress that leads to micro-cracking, edge defects, or drift in optical performance. These issues often appear after repeated cycles, not immediately.

Chemical exposure

Cleaning chemistry and in-service contaminants are a common real-world durability limiter. Disinfectants, solvents, fuels, oils, or salt environments can attack the coating stack directly or weaken adhesion over time. Chemical compatibility must be treated as part of durability, not as a separate “materials” issue.

Optional stresses when the optic is exposed externally

If the optic is in an exposed environment, additional failure drivers may matter: wiper/rain erosion, UV exposure, sand/dust abrasion, and repeated surface contamination events. These are not universal requirements, but when they apply, they often dominate the durability plan.

With failure modes defined, you can build a test plan that targets them without over-testing or missing the real limiter.

How to Build a Coating Durability Test Plan

A durability plan is most effective when it is built from the outside in: start with the threats the optic will actually see, then select tests that reproduce those stresses in a controlled, repeatable way.

The goal is not to run every available test.

It is to run the smallest set that reliably exposes the failure modes that matter for your use case, at a severity level that is representative rather than arbitrary.

Minimal-set rule: Start with one exposure per dominant threat (contact, chemistry, humidity, thermal), plus one sequencing run that mirrors the real lifecycle.

Add additional tests only when a failure mode cannot be isolated, a customer/spec explicitly requires it, or the system risk justifies the extra evidence (e.g., safety-critical or high-cost field failure).
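The minimal-set rule can be sketched as a small planning helper. This is an illustrative sketch only: the function name `minimal_test_set`, the threat labels, and the dictionary layout are assumptions made for the example, not part of any standard or tool.

```python
# Hypothetical labels for the four dominant threat classes named above.
ALLOWED_THREATS = ("contact", "chemistry", "humidity", "thermal")


def minimal_test_set(dominant_threats, lifecycle_order):
    """Return one isolated exposure per dominant threat plus one sequenced run.

    dominant_threats: threats the product actually sees.
    lifecycle_order: the order in which exposures occur in real use.
    """
    unknown = sorted(set(dominant_threats) - set(ALLOWED_THREATS))
    if unknown:
        raise ValueError(f"unmapped threats: {unknown}")
    isolated = [
        {"type": "isolated", "exposures": [threat]}
        for threat in ALLOWED_THREATS
        if threat in dominant_threats
    ]
    # The sequenced run mirrors the lifecycle, limited to the dominant threats.
    sequence = [step for step in lifecycle_order if step in dominant_threats]
    return isolated + [{"type": "sequenced", "exposures": sequence}]


plan = minimal_test_set(
    dominant_threats={"contact", "chemistry", "humidity"},
    lifecycle_order=["humidity", "chemistry", "contact"],
)
for run in plan:
    print(run["type"], run["exposures"])
```

Any test beyond this list would then need one of the justifications above (isolation, explicit requirement, or system risk) recorded next to it.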

Build a Threat-to-Test Map and Define What “Pass” Means

Use the table below to map each real-world threat to a test class, the severity levers that actually change outcomes, and what to measure before and after exposure so every test is tied to a defined risk and produces sign-off evidence, not just reassurance.

| Threat/exposure in the product | Test type (category) | Severity levers to tune | Evidence to capture (pre/post) | Common false pass to avoid |
|---|---|---|---|---|
| Wiping and routine handling | Abrasion/wipe/handling simulation | Wipe material, applied load, cycle count, dry vs lubricated, particulate presence | Cosmetic mapping + photos; optical performance check tied to the function (e.g., spectral transmission/reflectance) | “Looks fine” after a few wipes, but develops haze/scatter or cosmetic growth after repeated cleaning |
| Particulate drag/dust contact | Particle abrasion exposure | Particle type/size, surface condition, contact mode, cycles, cleaning method post-exposure | Defect mapping, pre/post optical check, note cleaning method used | Damage masked by aggressive cleaning or inspection done only under casual lighting |
| Adhesion integrity under stress | Adhesion evaluation tied to exposures | Where assessed (center vs edges), exposure history (humidity/thermal/chemical), and sample geometry | Clear adhesion outcome documentation + location mapping; pre/post optical check if drift is possible | Adhesion checked only at time zero, not after cycling/chemistry that triggers loss |
| Humidity storage/condensation cycles | Humidity exposure/condensation cycling | RH level, dwell duration, condensing vs non-condensing, transitions, temperature | Haze/scatter indication if relevant; defect mapping; pre/post optical check | Passing visually while optical performance drifts (especially in low-contrast systems) |
| Temperature swings during operation | Thermal cycling | Temperature range, ramp rate, dwell times, cycle count, fixture constraint | Defect mapping (crack/edge growth); pre/post optical check; note fixture/contact points | Cycling on ideal coupons while real parts fail due to geometry, mounting stress, or edges |
| Cleaning chemistry/disinfectants | Chemical resistance exposure (wipe/soak) | Chemistry selection, concentration, dwell time, exposure method, cycles, temperature | Pre/post optical check; cosmetic mapping; documentation of method (wipe vs soak) | Using a “representative” chemical name, but not the real concentration, dwell time, or wipe practice |
| Fuels/oils/salt environments (if applicable) | Chemical + environmental exposure | Contaminant type, dwell, temperature, combined exposure with humidity/cycling | Defect mapping; pre/post optical check; note post-cleaning behavior | Testing a contaminant in isolation when the real field condition is combined (e.g., salt + humidity + wiping) |
| External abrasion/erosion (if applicable) | Environmental abrasion/erosion | Exposure intensity, duration, particle load, impact conditions | Cosmetic mapping; pre/post optical check; functional check at worst-case angles if relevant | Assuming “abrasion resistance” covers erosion/rain/wiper effects without a representative exposure |

This mapping step keeps the plan defensible: every test exists because it represents a defined threat, and every result produces measurable evidence rather than reassurance.
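One way to keep that discipline is to hold the map as structured data and check it for gaps before testing starts. The sketch below is a minimal illustration; the `PlannedTest` class, its field names, and `find_gaps` are hypothetical and simply mirror the table columns.

```python
from dataclasses import dataclass, field


@dataclass
class PlannedTest:
    threat: str                                           # real-world exposure this test represents
    test_type: str                                        # test category from the table
    severity_levers: list = field(default_factory=list)   # variables tuned to set severity
    evidence: list = field(default_factory=list)          # pre/post evidence to capture


def find_gaps(plan):
    """Return readable gaps: tests with no threat, no levers, or no evidence."""
    gaps = []
    for test in plan:
        if not test.threat.strip():
            gaps.append(f"{test.test_type}: no threat defined")
        if not test.severity_levers:
            gaps.append(f"{test.test_type}: no severity levers set")
        if not test.evidence:
            gaps.append(f"{test.test_type}: no pre/post evidence defined")
    return gaps


plan = [
    PlannedTest(
        threat="Wiping and routine handling",
        test_type="Abrasion/wipe/handling simulation",
        severity_levers=["wipe material", "applied load", "cycle count"],
        evidence=["cosmetic mapping + photos", "spectral transmission check"],
    ),
    # Deliberately incomplete entry to show what the check reports.
    PlannedTest(threat="", test_type="Thermal cycling"),
]

for gap in find_gaps(plan):
    print(gap)
```

A plan with an empty gap list is not automatically a good plan, but a non-empty one points to a test that exists without a defined threat or without sign-off evidence.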

Define Pass/Fail: Optical Drift Versus Cosmetic Drift

Durability qualification fails most often when the program relies on visual inspection alone. A coating can look acceptable and still drift optically in ways that matter to the system.

Before running exposures, document two pass/fail layers, plus a third when scatter matters to the system:

Optical performance criteria: the metric tied to the coating’s function (commonly spectral transmission/reflectance in the relevant band). Define allowable drift as a threshold, not a narrative description.

Cosmetic/defect criteria: what counts as a defect, where it is evaluated, and how it is recorded (defect maps and controlled photos, not informal “looks good” checks).

Scatter/haze behavior (only when relevant): if the system is contrast-limited or low-signal, include a repeatable haze/scatter assessment or proxy method rather than relying on appearance.

If you cannot measure pass/fail in a repeatable way, the plan is not yet a qualification plan; it is screening.
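A threshold-based optical criterion can be as simple as comparing the worst-case pre/post change against an allowed drift. The sketch below assumes scans stored as wavelength-to-value dictionaries; the function names, the metric (maximum absolute change), and all numbers are illustrative assumptions, not measured data or a recommended limit.

```python
def max_abs_drift(pre, post):
    """Largest absolute pre/post change across the measured wavelengths.

    pre and post map wavelength (nm) to a measured value such as percent
    transmission. Both scans must cover the same wavelengths.
    """
    if pre.keys() != post.keys():
        raise ValueError("pre and post scans must use the same wavelengths")
    return max(abs(post[w] - pre[w]) for w in pre)


def optical_pass(pre, post, max_drift):
    """True when the worst-case drift stays within the allowed threshold."""
    return max_abs_drift(pre, post) <= max_drift


# Hypothetical values for illustration only.
pre_scan = {500: 99.0, 550: 99.5, 600: 99.0}
post_scan = {500: 98.5, 550: 99.25, 600: 98.0}

print(max_abs_drift(pre_scan, post_scan))
print(optical_pass(pre_scan, post_scan, max_drift=0.5))
```

Other metrics (band-average change, shift of a filter edge) may suit a given coating better; the point is that the criterion is a number checked the same way every time.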

Set Severity by Tuning the Levers That Matter

Severity should be set by tuning the variables that control the failure mechanism, not by defaulting to the harshest version of a test. Focus on:

Contact + exposure dose: wipe material/load/particulates (or fixture constraint), plus cycle count, dwell time, and whether exposure is dry/lubricated or wipe/soak.

Chemistry + environment: actual chemistry and concentration (including pH/solvent type), and the temperature/humidity regime (including condensing vs non-condensing transitions).

A useful discipline is to define both a representative condition (routine use) and a margin condition (variability and misuse).
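The representative/margin pairing can be recorded explicitly so both conditions are run and reported side by side. This is a sketch under stated assumptions: the `WipeCondition` fields, the scaling approach, and every number are placeholders chosen for the example, not recommended test levels.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class WipeCondition:
    wipe_material: str
    load_newtons: float
    cycles: int
    dry: bool


def margin_condition(representative, cycle_factor, load_factor):
    """Derive a margin condition by scaling cycle count and applied load.

    The factors are program decisions; scaling is only one possible way
    to express variability and misuse.
    """
    if cycle_factor < 1 or load_factor < 1:
        raise ValueError("margin factors should not be below 1")
    return replace(
        representative,
        cycles=round(representative.cycles * cycle_factor),
        load_newtons=representative.load_newtons * load_factor,
    )


# Placeholder values, not a recommended severity.
routine = WipeCondition("microfiber cloth", load_newtons=2.0, cycles=200, dry=False)
misuse = margin_condition(routine, cycle_factor=2, load_factor=1.5)
print(routine)
print(misuse)
```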

Sequence Tests to Reveal Real Mechanisms

Order matters because one exposure can change how another failure mode appears. Humidity and thermal cycling can weaken adhesion, which then changes abrasion response. Chemical exposure can alter surface behavior, changing how wiping damage presents.

Rather than treating tests as independent checkboxes, decide whether your use case requires:

Sequential exposure (to simulate lifecycle reality)

Isolated exposure (to pinpoint a suspected limiter)

In practice, plans often do both: isolate early to diagnose, then sequence later to confirm survivability.

Decide When Witness Samples Are Sufficient and When They Are Not

Witness samples are efficient for early screening and process monitoring, but they do not always represent real parts. Escalate to representative parts or builds when:

Edges, apertures, or features change coating behavior

Packaging stresses (mounting, windows, bonding) are part of the real environment

Cosmetic acceptance is tight, and surface handling is unavoidable

The substrate and finish on the part differ from the witness coupon

A plan that never touches representative geometry often produces results that are true for the coupon and wrong for the product.
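The escalation conditions above can be written as a short checklist function so the decision and its reasons are recorded rather than assumed. The key names and the dictionary format are hypothetical, used only for this sketch.

```python
def escalation_reasons(part):
    """Return the reasons, if any, to test representative parts instead of coupons.

    `part` is a plain dict of yes/no answers; missing keys count as "no".
    """
    checks = {
        "geometry_changes_coating": "edges, apertures, or features change coating behavior",
        "packaging_stress_present": "packaging stresses are part of the real environment",
        "tight_cosmetics_with_handling": "cosmetic acceptance is tight and handling is unavoidable",
        "substrate_differs_from_coupon": "part substrate or finish differs from the witness coupon",
    }
    return [reason for key, reason in checks.items() if part.get(key, False)]


reasons = escalation_reasons({"packaging_stress_present": True})
print("escalate" if reasons else "coupons sufficient for now", reasons)
```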

Capture Evidence That Supports Sign-Off

Durability testing should produce more than a pass/fail statement. At minimum, capture:

Defect mapping and photos (where damage appears, how it evolves, whether it is localized or systematic)

Pre/post optical measurements tied to the optical function (so “looks fine” is not mistaken for “still meets performance”)

Notes on handling and fixturing that could explain artifacts and repeatability issues

This documentation becomes the bridge between test work and a release decision.

The same test plan can produce different outcomes depending on the substrate, especially on polymers, so the substrate must be addressed explicitly.

Polymer vs Glass: How Substrate Changes Durability Testing

A durability plan that ignores the substrate is incomplete. The same coating stack can behave very differently on glass versus polymer, not because the coating “changed,” but because the substrate changes the mechanical, thermal, and handling conditions the coating must survive.

If your product uses polymer optics, durability qualification must treat substrate behavior as a primary variable, not a footnote.

Why Polymers Behave Differently in Durability Testing

Compared with glass, polymers introduce constraints that can shift both failure modes and test outcomes:

Thermal limits + CTE (coefficient of thermal expansion) mismatch: polymers typically have narrower temperature windows and larger expansion/contraction than glass, which can increase thermo-mechanical stress in the coating stack during cycling and change surface behavior over time.

Lower mar resistance: polymer surfaces are generally more prone to visible marking from wiping, particulate drag, and routine handling contact.

Cleaning sensitivity: chemistries and repeated cleaning actions that are benign on glass can cause surface damage, swelling, or cosmetic change on certain polymers.

Handling and fixturing effects: clamping, contact points, and assembly handling can introduce micro-damage or stress that becomes visible only after environmental exposure.

These differences do not automatically make polymers “harder to coat.” They make polymer durability qualification more dependent on representative surfaces and realistic handling.

Failure Risks That Tend to Rise in Polymer Optics

Programs commonly encounter three durability risks more often on polymers:

Abrasion sensitivity: small contact events can create cosmetic defects or haze that are unacceptable even if spectral performance remains nominal.

Adhesion edge cases: stresses from thermal cycling or surface preparation variability can expose adhesion weakness that does not appear on glass.

Visibility of cosmetic defects: minor marks that would be tolerated on glass can be far more visible on polymer parts, depending on geometry and surface finish.

The practical implication is that polymer durability plans must consider both optical performance drift and appearance-driven failure.

Practical Controls That Make Polymer Results Representative

Durability testing on polymers is most credible when the test articles and handling reflect production reality:

Use representative surface finish and molding state (not a “best-case” polished coupon if the product finish differs)

Control substrate preparation consistently and document it (cleaning, surface conditioning, handling)

Replicate assembly and handling steps that create contact risk (fixturing, protective films, packaging, cleaning method)

Plan a protection strategy early: how the optic is protected during assembly and how it is cleaned in service.

These controls prevent a common failure mode: a coating that “passes on coupons” but fails on parts due to surface, handling, or stress differences.

When to Escalate from Coupons to Real Parts for Sign-Off

Witness coupons remain valuable for early screening and process monitoring. Escalate to real parts or representative builds when:

Geometry introduces edges, apertures, or features that change coating behavior

The part finish differs from the coupon finish

Packaging, bonding, or mounting stress is part of the real environment

Cosmetic appearance is a functional requirement, not a preference

If the product uses polymer optics that are cleaned frequently, a sign-off that does not include representative parts is often the highest-risk gap.

Once substrate realities are included, the next question is what you will measure to define “pass” in a way production can repeat.

Qualification Checklist: What Must Be True Before You Sign Off

A coating is not “qualified” because it survived one exposure. It is qualified when the threat model, test plan, and acceptance criteria match how the part will be built, handled, cleaned, and measured so the result holds at scale.

1) The Threat Model Is Explicit

Confirm the plan reflects real exposures:

Contact and handling (assembly touch points, wiping, particulate contact)

Cleaning chemistry and method (what is used, how, how often; wipe vs soak)

Environment and cycling (humidity/condensation, temperature range, thermal cycling)

Lifecycle and external exposure (service duration, storage, dust/salt/UV/wiper erosion if applicable)

2) Representativeness Is Demonstrated

Confirm the test article and setup match production:

Correct substrate and representative surface finish

Production-representative stack/process state

Realistic fixturing/handling and contact points

Packaging influences included when relevant (windows/covers, mounts, bondlines)

3) Pass/Fail Criteria Are Measurable

Confirm acceptance can be repeated in the intended inspection flow:

Optical criteria based on the functional metric (pre/post-performance checks)

Cosmetic/defect criteria with documented rules (what counts, where, and how it’s recorded)

Adhesion outcomes with clear thresholds and documentation expectations

4) Sampling and Repeatability Are Defined

Confirm the plan supports release confidence, not one-off success:

What gets tested (coupons, representative parts, or both) and why

Sample size and lot/build coverage (single lot vs multiple lots/builds)

Allowed variation and how drift is reported (what is meaningful vs noise)
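One common way to separate meaningful drift from noise is to estimate measurement repeatability from repeated readings of an unexposed reference part, then compare each pre/post change against a multiple of that spread. The sketch below assumes this approach; the function name, the default multiplier `k=3.0`, and the readings are illustrative assumptions, not program requirements.

```python
from statistics import stdev


def classify_drift(reference_repeats, pre, post, k=3.0):
    """Label a pre/post change as 'noise' or 'meaningful'.

    reference_repeats: repeated readings of an unexposed reference part,
    used to estimate measurement repeatability.
    k: multiple of the repeatability standard deviation treated as the
    noise floor (an assumed convention).
    """
    if len(reference_repeats) < 2:
        raise ValueError("need at least two repeat readings")
    noise_floor = k * stdev(reference_repeats)
    drift = post - pre
    label = "meaningful" if abs(drift) > noise_floor else "noise"
    return drift, noise_floor, label


# Hypothetical readings for illustration only.
repeats = [99.0, 99.1, 98.9, 99.0]
print(classify_drift(repeats, pre=99.0, post=98.9))
print(classify_drift(repeats, pre=99.0, post=98.0))
```

A change labeled "meaningful" here is not automatically a failure; it still has to be compared with the acceptance threshold. The check only prevents instrument scatter from being reported as coating drift, or real drift from being dismissed as scatter.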

5) Requalification Triggers Are Set Before Release

Confirm the program knows what changes invalidate the qualification:

Substrate/material or prep changes

Stack/process/deposition changes

Cleaning chemistry/method changes (chemical, concentration, wipe material, frequency)

Packaging changes that alter stress or exposure (mounting, window, bonding, sealing)
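Requalification triggers are easier to enforce when the qualified configuration is stored as a baseline and later builds are compared against it. The sketch below is a minimal illustration; the field names and the example values are hypothetical.

```python
# Controlled fields that mirror the trigger list above (names are hypothetical).
REQUAL_FIELDS = (
    "substrate",
    "substrate_prep",
    "stack_process",
    "cleaning_chemistry",
    "cleaning_method",
    "packaging",
)


def requalification_triggers(qualified, current):
    """List the controlled fields whose value differs from the qualified baseline."""
    return [name for name in REQUAL_FIELDS if qualified.get(name) != current.get(name)]


# Placeholder configuration for illustration only.
baseline = {
    "substrate": "polymer grade A",
    "substrate_prep": "prep recipe 1",
    "stack_process": "AR stack rev 2",
    "cleaning_chemistry": "isopropyl alcohol 70%",
    "cleaning_method": "wipe, microfiber",
    "packaging": "bonded window",
}
changed = dict(baseline, cleaning_chemistry="isopropyl alcohol 90%")

print(requalification_triggers(baseline, baseline))
print(requalification_triggers(baseline, changed))
```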

If gaps show up here, especially around repeatability on real parts, polymer behavior, or acceptance criteria that are hard to measure consistently, that is typically where a manufacturing-led qualification review prevents rework after integration.

Closing the Gap Between Coupon Testing and Real-Part Sign-Off

Apollo Optical Systems supports durability qualification beyond DLC by keeping the work tied to production reality rather than coupon-only confidence:

Translating your threat model (handling, cleaning chemistry, humidity/thermal exposure) into a durability test plan that reflects how the optic is actually built, fixtured, cleaned, and used.

Planning coating approaches for polymer optics where substrate limits, thermal behavior, and handling steps often determine whether a “durable” stack stays durable in service.

Aligning qualification with verification by defining what should be proven on witness samples versus what must be proven on representative parts, and which pre-/post-optical checks provide release-ready evidence.

Maintaining continuity from prototype evaluation to production repeatability so the coating stack you qualify remains stable as builds scale.

Use the threat-to-test table and sign-off checklist above to draft a one-page qualification brief.

If you want a second set of eyes on whether the plan is measurable and production-representative, especially on polymer optics, Apollo Optical Systems can review the approach before you lock the coating stack.

Conclusion

Durability testing beyond DLC is a qualification problem, not a branding decision. The reliable path is to start with the threats the optic must survive, select tests and severity that reflect real handling, cleaning chemistry, and environmental exposure, and define acceptance criteria that catch optical drift as well as visible damage.

When the plan is substrate-aware, especially for polymer optics, and the pass/fail metrics are measurable in the production test flow, durability becomes a controlled sign-off decision rather than a problem discovered after integration.

FAQs

What tests are used to verify optical coating durability?

Durability qualification typically evaluates abrasion/handling damage, adhesion integrity, humidity exposure, thermal cycling response, and chemical resistance. The right combination depends on how the optic is handled, cleaned, and exposed in service.

Should durability testing be done on witness samples or real optical parts?

Witness samples are useful for early screening and process monitoring. For sign-off, many programs require representative parts or builds because substrate behavior, edges, surface finish, and packaging stress can change outcomes.

How do you choose abrasion test severity for optical coatings?

Severity should be tied to real contact conditions: wipe material, expected cycle count, particulate presence, contact pressure, and cleaning method. Over-severe testing can reject viable stacks; under-testing shifts risk into the field.

What should be measured before and after durability testing?

Record the optical performance tied to system requirements (often spectral transmission/reflectance) and document cosmetic defects consistently. If haze or scatter affects performance, include a repeatable haze/scatter evaluation before and after exposure.

Why do coatings fail after humidity or thermal cycling?

Moisture and temperature swings can expose adhesion weaknesses and increase thermo-mechanical stress, especially when coating and substrate expansion differ. Failures may show up as delamination, micro-cracking, haze, or performance drift.